A new serverless development project can be doomed from the start if it is launched using antiquated procedures and techniques. As a result, a substantial mental adjustment is needed. The six AWS Well-Architected Framework pillars are covered in this article, along with implementation strategies for secure, effective serverless systems.

Unpopular opinion: Developers should rethink their approach when planning and creating serverless applications.

Popular opinion: Enterprises have a growing need to upgrade their apps and methods for delivering digital experiences to millions of people. Serverless is one of these strategies.

To increase agility and reduce overall operational overhead and costs, IT leaders are reevaluating their strategies. Part of that reevaluation is working out how to build serverless applications in a way that is efficient and simple.

AWS Lambda functions, which serve as the backbone of serverless software developed on AWS, are stateless and ephemeral. They run on infrastructure managed by AWS. This architecture can support and power a wide range of application workflows.

All of these elements compelled us to reevaluate the best way to create serverless applications. How can their dependability be increased while latencies are cut? How can a robust platform be built to withstand errors and enforce security policies? And how can all of this be done without having to maintain complicated infrastructure?

This article introduces best practices that help serverless apps succeed in competitive industries. The practices follow the AWS Well-Architected Framework.

Let's start now!

6 Pillars of AWS Well-Architected Framework

There are several guiding concepts in the AWS Well-Architected Framework. They concentrate on six key components of an application that have a substantial business impact.

Operational Excellence

The ability to run and manage workloads efficiently and to support development, to gain insight into operations, and to continuously improve the supporting processes in order to deliver business value.

Security

Data, systems, and assets must be secured and protected. To ensure increased security, systems must utilize cloud technologies to their maximum capacity.

Reliability

Ensuring that tasks carry out their intended purpose accurately and consistently when anticipated.

Performance

Using computing resources efficiently to run workloads and meet system requirements, and maintaining that efficiency as demand changes.

Cost optimization

Ensuring that the systems you operate deliver business value at the lowest possible price.

Sustainability

Addressing your company's long-term effects on the environment, the economy, and society. This pillar helps companies develop applications that make the best use of resources and set sustainability targets.

How to implement these pillars for your serverless application?

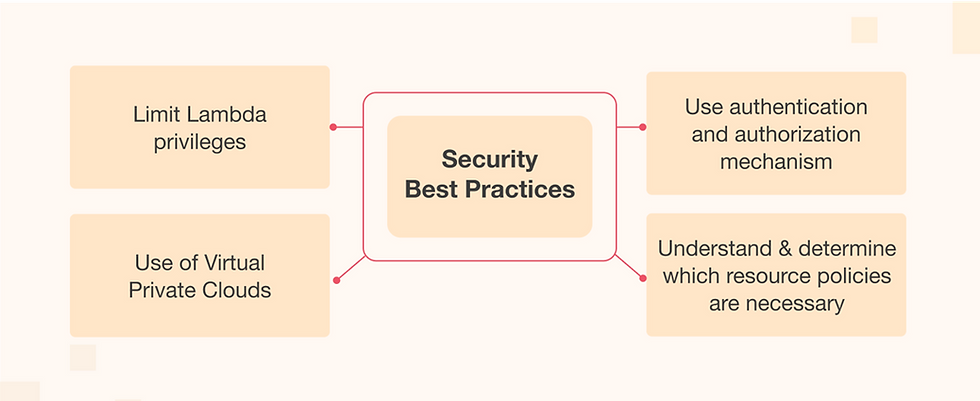

Security

Serverless applications are prone to security problems because they depend entirely on function as a service (FaaS), and because third-party components and libraries are constantly linked together through networks and events. As a result, attackers can harm your application directly or compromise it through vulnerable dependencies.

Organizations can employ various techniques in their daily operations to overcome these challenges and protect their serverless applications from potential dangers.

Limit Lambda privileges

Assume you've configured your serverless application on AWS. AWS Lambda functions help you manage your infrastructure while cutting costs. You can lessen the risk of over-privileged Lambda functions by applying the principle of least privilege. Under this principle, each function is given an IAM role that grants access only to the resources and services it requires to finish its task.

For instance, a function may need CRUD permissions on certain database tables (in Amazon RDS, for example) but only read-only access to others. Policies therefore restrict the scope of authorization to particular actions on particular resources. Securing serverless apps becomes much simpler if you give each Lambda function its own IAM role with its own set of permissions.
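As a rough illustration of least privilege, the sketch below builds two IAM policy documents: one with CRUD access to a single table and one with read-only access to another. It assumes Amazon DynamoDB tables rather than RDS, because DynamoDB permissions map directly onto IAM actions. The table names, account ID, and the `table_policy` helper are hypothetical.

```python
import json

# IAM actions for DynamoDB, split into read and write groups.
READ_ACTIONS = ["dynamodb:GetItem", "dynamodb:Query"]
WRITE_ACTIONS = ["dynamodb:PutItem", "dynamodb:UpdateItem", "dynamodb:DeleteItem"]


def table_policy(table_arn: str, read_only: bool) -> dict:
    """Build a policy document scoped to exactly one table."""
    actions = READ_ACTIONS if read_only else READ_ACTIONS + WRITE_ACTIONS
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": actions, "Resource": table_arn}
        ],
    }


# Hypothetical ARNs, used only for illustration.
ORDERS_TABLE = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders"
CATALOG_TABLE = "arn:aws:dynamodb:us-east-1:123456789012:table/Catalog"

# One policy per function role: the order writer gets CRUD on Orders only,
# the catalog reader gets read-only access to Catalog only.
order_writer_policy = table_policy(ORDERS_TABLE, read_only=False)
catalog_reader_policy = table_policy(CATALOG_TABLE, read_only=True)

if __name__ == "__main__":
    print(json.dumps(catalog_reader_policy, indent=2))
```

Each policy would be attached to the role of a single function, so a compromised catalog reader cannot write to or delete from either table.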

Use of Virtual Private Clouds

With Amazon Virtual Private Cloud (VPC), you can set up an isolated virtual network in which to deploy AWS services. It offers a wide range of security features for your serverless application. For instance, you can use security groups as virtual firewalls to control traffic to and from relational databases and EC2 instances. By utilising VPCs, you can considerably reduce the number of exploitable entry points and flaws that would otherwise put your serverless application at risk.

Understand and determine which resource policies are necessary

Resource-based policies can be put in place to secure services by restricting access to them. These policies, which specify the permitted actions and the principals allowed to perform them, help protect service components. They can restrict access based on attributes such as source IP address, version, or function event source. These policies are evaluated at the IAM level before any AWS service applies its own authentication.
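To make this concrete, here is a minimal sketch of a resource policy that only allows API invocations from a given IP range, using the `aws:SourceIp` condition key. The API ARN and CIDR range are hypothetical, and `ip_restricted_api_policy` is an illustrative helper rather than part of any AWS library.

```python
import ipaddress
import json


def ip_restricted_api_policy(api_arn: str, allowed_cidrs: list) -> dict:
    """Build a resource policy allowing invocation only from the given CIDRs."""
    # Validate each CIDR locally so a typo fails before deployment.
    # ip_network raises ValueError for malformed input.
    for cidr in allowed_cidrs:
        ipaddress.ip_network(cidr)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": api_arn,
                "Condition": {"IpAddress": {"aws:SourceIp": list(allowed_cidrs)}},
            }
        ],
    }


# Hypothetical API ARN and office network range.
API_ARN = "arn:aws:execute-api:us-east-1:123456789012:abc123/*"
office_only_policy = ip_restricted_api_policy(API_ARN, ["203.0.113.0/24"])

if __name__ == "__main__":
    print(json.dumps(office_only_policy, indent=2))
```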

Use an authentication and authorization mechanism

Use authentication and authorization mechanisms to control and manage access to specific resources. The Well-Architected Framework recommends this approach for serverless APIs in serverless applications. These mechanisms validate the identity of a client or user and establish whether they have access to a particular resource. This makes it straightforward to prevent unauthorized users from tampering with the service.
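One way to apply this with Amazon API Gateway is a token-based Lambda authorizer. The sketch below shows the shape of such a function: it receives `authorizationToken` and `methodArn` in the event and returns an IAM policy that allows or denies `execute-api:Invoke`. The in-memory `VALID_TOKENS` table is a hypothetical stand-in; a real authorizer would verify a signed token (a JWT, for example) against an identity provider.

```python
# Hypothetical token table, for illustration only. Never hard-code real tokens.
VALID_TOKENS = {"demo-token-alice": "alice"}


def build_policy(principal_id: str, effect: str, method_arn: str) -> dict:
    """Return an authorizer response that allows or denies the API call."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": effect,
                    "Resource": method_arn,
                }
            ],
        },
    }


def handler(event, context):
    """Token authorizer: authenticate the caller, then authorize the method."""
    token = event.get("authorizationToken", "")
    user = VALID_TOKENS.get(token)
    if user is None:
        # Unknown caller: explicitly deny access to the requested method.
        return build_policy("anonymous", "Deny", event["methodArn"])
    return build_policy(user, "Allow", event["methodArn"])
```

Keeping authentication in the authorizer means the business functions behind the API never see unauthenticated requests.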

Operational Excellence

Use application, business, and operations metrics

Identify key performance indicators (KPIs) based on operational, customer, and business outcomes. These quickly give you a higher-level view of how the application is performing.

Consider how well your application is doing in light of the company's goals. Take an eCommerce business owner as an example, who may want to know the KPIs related to customer experience. The number of transactions in the eCommerce application shows how effectively customers are using the service overall, so a drop in transactions is a signal worth investigating. Other useful indicators include perceived latency, the length of the checkout process, and how easy it is to select a payment option. Operational metrics, in turn, let you track the operational stability of your application over time. They may include continuous integration, delivery, feedback, problem resolution time, and other factors.
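A business KPI such as completed orders can be published from a Lambda function by writing a log line in the CloudWatch Embedded Metric Format, which CloudWatch turns into a metric. The following is a minimal sketch of that idea. The namespace, dimension, metric names, and the `duration_ms` event field are hypothetical choices for the eCommerce example.

```python
import json
import time


def emf_record(namespace: str, service: str, metric: str, value, unit: str = "Count") -> dict:
    """Build one Embedded Metric Format record for a single metric."""
    return {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [["Service"]],
                    "Metrics": [{"Name": metric, "Unit": unit}],
                }
            ],
        },
        # The dimension and metric values live at the top level of the record.
        "Service": service,
        metric: value,
    }


def handler(event, context):
    """Hypothetical checkout handler that emits business and latency metrics."""
    duration_ms = event.get("duration_ms", 0)
    # Printing JSON to stdout sends it to CloudWatch Logs when run in Lambda.
    print(json.dumps(emf_record("ECommerce", "checkout", "CompletedOrders", 1)))
    print(json.dumps(emf_record("ECommerce", "checkout", "CheckoutDurationMs",
                                duration_ms, "Milliseconds")))
    return {"statusCode": 200}
```

Because the metric is emitted as a log line, the function does not need to make an extra API call on the request path.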

Understand, analyze, and alert on the metrics provided out of the box

Investigate and analyze the AWS services that your application makes use of. AWS services provide a set of common metrics out of the box that help you monitor the performance of your applications. Services generate these metrics automatically, so all you have to do is start tracking them and create your own custom metrics where needed. Begin by determining which AWS services your application uses. Take, for example, an airline reservation system that uses AWS Lambda, AWS Step Functions, and Amazon DynamoDB. When a customer requests a booking, these AWS services publish metrics to Amazon CloudWatch without impairing the application's performance.

Reliability

High availability and managing failures

Periodic failures are a real possibility for any system, and the chances increase when one server depends on another. Even when systems or services do not fail completely, they eventually experience partial failures. As a result, applications must be designed to be resilient to such component failures. The application architecture should be able to detect faults as well as self-heal.

Transaction failures, for example, can occur when one or more components are unavailable, such as when there is a lot of traffic. AWS Lambda, on the other hand, is designed to handle faults and is fault tolerant. If the service has trouble calling a specific service or function, it invokes the function in a different Availability Zone. Another example is reading from Amazon Kinesis data streams and Amazon DynamoDB streams. When reading from these two data sources, Lambda retries the entire batch of records on failure. These retries are repeated until the records expire or reach the maximum age specified on the event source mapping. The event source mapping can also be configured to split a failed batch into two batches. Retrying smaller batches isolates corrupt records while avoiding timeout issues.

When non-atomic actions occur, it's a good idea to evaluate the response and deal with partial failures programmatically. For instance, PutRecords for Kinesis and BatchWriteItem for DynamoDB both return a successful result if at least one record has been successfully ingested.
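The sketch below illustrates one way to handle such a partial failure for Kinesis. A PutRecords response reports `FailedRecordCount` and returns one result entry per submitted record, in the same order, with an `ErrorCode` on the entries that failed. The `send` callable is an assumption standing in for the real SDK call (for example, a wrapper around boto3's `put_records`), which keeps the example runnable without AWS access.

```python
def failed_records(request_records: list, response: dict) -> list:
    """Return the submitted records that the response marks as failed."""
    if response.get("FailedRecordCount", 0) == 0:
        return []
    # Result entries are in the same order as the submitted records.
    return [
        record
        for record, result in zip(request_records, response["Records"])
        if "ErrorCode" in result
    ]


def put_with_retry(send, records: list, max_attempts: int = 3) -> list:
    """Resend only the failed records; return whatever still failed at the end."""
    pending = list(records)
    for _ in range(max_attempts):
        if not pending:
            break
        response = send(pending)
        pending = failed_records(pending, response)
    return pending


if __name__ == "__main__":
    # Simulated response: the second of two records was throttled.
    sample_response = {
        "FailedRecordCount": 1,
        "Records": [
            {"SequenceNumber": "1", "ShardId": "shardId-000000000000"},
            {"ErrorCode": "ProvisionedThroughputExceededException",
             "ErrorMessage": "Rate exceeded"},
        ],
    }
    batch = [{"Data": b"a", "PartitionKey": "k1"}, {"Data": b"b", "PartitionKey": "k2"}]
    print(failed_records(batch, sample_response))
```

In production you would normally add a delay with backoff between attempts and send records that never succeed to a dead-letter destination.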

Throttling

Throttling keeps APIs from receiving too many requests. Amazon API Gateway, for example, throttles calls to your API by limiting the number of requests a client can submit in a given time frame. These limits are applied across all clients using the token bucket algorithm. API Gateway limits both the steady-state rate and the burst rate of submitted requests. With each API request, a token is removed from the bucket, and the throttle burst determines the number of concurrent requests. By restricting excessive API use, this strategy maintains system performance and minimises system degradation. Take, for example, a large-scale, global system with millions of users that receives a large number of API calls every second. Trying to handle every one of these calls without limits can cause the system to lag and perform poorly.
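The token bucket algorithm itself is small enough to sketch. The version below is a generic illustration, not API Gateway's internal implementation: `rate` is the steady-state number of requests per second, `burst` is the bucket capacity, and the injectable `clock` parameter exists only to make the behaviour easy to test.

```python
import time


class TokenBucket:
    """Allow up to `burst` requests at once, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise the request is throttled."""
        now = self.clock()
        # Refill according to elapsed time, never beyond the bucket capacity.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=2, burst=3)
    # The first three calls drain the burst; the fourth is throttled
    # unless enough time has passed for a refill.
    print([bucket.allow() for _ in range(4)])
```

A throttled caller would typically receive an HTTP 429 response and retry after a short delay.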

Performance Efficiency

Reduce cold starts

Cold starts account for less than 0.25 percent of AWS Lambda requests. Nonetheless, they have a substantial impact on application performance. On occasion, a cold start can delay code execution by up to 5 seconds. The impact is felt most in large, real-time applications that must respond in milliseconds.

The AWS Well-Architected best practices for performance recommend reducing the number of cold starts. You can accomplish this by taking into account several factors, including how quickly your instances start. This is determined by the languages and frameworks used. Languages with lightweight runtimes, such as Go and Python, typically start faster than Java and C#.

In addition, aim for fewer functions while still allowing for functional separation. Finally, import only the libraries and dependencies that your application code actually needs. Instead of the entire AWS SDK, you can import specific services. For example, if Amazon DynamoDB is the only part of the AWS SDK your code uses, import only the DynamoDB client.

Integrate with managed services directly over functions

It is beneficial to use native integrations among managed services. When no custom logic or data transformation is required, use them instead of Lambda functions. Native integrations help achieve optimal performance with the fewest resources to manage.

Consider Amazon API Gateway. You can connect to other AWS services natively using the AWS integration type. Similarly, when using AWS AppSync, you can use VTL and direct integration with Amazon Aurora and Amazon OpenSearch Service.

Serverless architecture is gaining traction in the tech industry because it reduces operational costs and complexity. Many experienced tech professionals have shared their knowledge of serverless application development best practices.

Cost Optimization

Required practice

Minimize external calls and function code initialization. Understanding the significance of function initialization is critical, because a function imports all of its dependencies when it is initialized. If your application uses multiple libraries, each library you include slows it down. It is therefore important to reduce external calls and eliminate dependencies whenever possible, although they cannot always be avoided for operations such as machine learning and other complex functionality.

Recognize and limit the resources that your AWS Lambda functions use while they are running, since this can directly affect the value provided per invocation. As a result, it is important to reduce reliance on other managed services and third-party APIs. Functions sometimes also rely on application dependencies that are not well suited to ephemeral environments.

Review code initialization

AWS Lambda reports the time it takes to initialize application code in Amazon CloudWatch Logs. Use these metrics to track costs and performance. Lambda functions are billed based on the number of requests made and the duration of execution.
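To see how request count and duration combine into a bill, the sketch below estimates cost from both components, with duration charged in GB-seconds (execution time multiplied by configured memory). The prices are passed in as parameters, and the values in the usage example are placeholders for illustration; check current AWS pricing for your region. The estimate ignores the free tier and other charges.

```python
def estimate_lambda_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate Lambda cost from request count and billed duration."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    # Duration is billed in GB-seconds: seconds of execution times memory in GB.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * price_per_gb_second


if __name__ == "__main__":
    # Placeholder prices, assumed for illustration only.
    prices = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.0000166667}
    before = estimate_lambda_cost(1_000_000, 100, 512, **prices)
    after = estimate_lambda_cost(1_000_000, 80, 512, **prices)
    print(f"before: {before:.4f}  after trimming duration: {after:.4f}")
```

Comparing estimates before and after trimming initialization or execution time gives a quick sense of whether an optimization is worth the effort.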

Examine your application's code and dependencies to reduce overall execution time. You can also make calls to resources outside of the Lambda execution environment once and reuse the responses in subsequent invocations. In some cases, we recommend using TTL mechanisms within your function handler code. This approach lets you fetch volatile data ahead of time and refresh it only when it expires, without making an additional external call on every invocation, which would increase execution time.
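A minimal sketch of such a TTL mechanism is shown below. It relies on the fact that module-level state survives between invocations of a warm execution environment. `fetch_config` is a hypothetical stand-in for an external call, and the five-minute TTL is an arbitrary assumption.

```python
import time

# Module-level state is kept for as long as the execution environment stays warm.
_CACHE = {}
TTL_SECONDS = 300  # assumed freshness window


def fetch_config() -> dict:
    """Hypothetical stand-in for a call to an external service."""
    return {"feature_enabled": True}


def get_cached(key, loader, ttl=TTL_SECONDS, now=time.monotonic):
    """Return the cached value for `key`, reloading it once the TTL has expired."""
    current = now()
    entry = _CACHE.get(key)
    if entry is not None and current - entry[0] < ttl:
        return entry[1]
    value = loader()  # the external call happens only on a miss or expiry
    _CACHE[key] = (current, value)
    return value


def handler(event, context):
    config = get_cached("config", fetch_config)
    return {"feature_enabled": config["feature_enabled"]}
```

Warm invocations within the TTL skip the external call entirely, which shortens billed duration; the trade-off is that data can be stale for up to the TTL.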

Sustainability

The sixth and most recently introduced pillar is sustainability. Like the other pillars, it includes questions that assess your workload. It evaluates the design, architecture, and implementation with the aim of minimizing energy consumption and increasing efficiency.

When compared to a typical on-premises deployment, AWS customers can save nearly 80% on energy. This is due to the capabilities AWS provides its customers in terms of higher server utilisation, power and cooling efficiency, custom data center design, and ongoing efforts to power AWS operations with 100% renewable energy by 2025.

Sustainability on AWS means applying certain design principles while developing your cloud application:

Understand and assess the business outcomes related to sustainability. Establish performance indicators and evaluate improvements against them.

Right-size workloads to maximize energy efficiency, which AWS both emphasizes and enables.

Establish long-term sustainability goals for each workload. Create an ROI model and an architecture that reduce impact per unit of work, for example per user or per operation, to achieve sustainability at a granular level.

Continuously evaluate your hardware and software choices for efficiency, and favour flexible technologies so that you can adopt more efficient options over time.

Reduce the infrastructure required to support a broader range of workloads by utilizing shared, managed services.

Reduce the amount of resources or energy required to use your services, and make it less necessary for your customers to upgrade their devices.

Six Pillars, one review!

This blog introduced best practices for implementing serverless applications using the AWS Well-Architected Framework. We also saw some examples that made following the framework appear simple.

But what if you want to know whether your current applications and workloads are well architected? Or whether they follow best practices (or at least some of them) after the remediation stage? Then get in touch with experienced AWS professionals. Once your application has gone through a well-architected review, you will be provided with a step-by-step roadmap. It will recommend ways to improve costs, performance, operational excellence, and other aspects that are important to your company!
